Modeling - whole first

There are two main approaches to creating a system.

- components first, where the interfaces and components are designed first and integrated at the end

- whole first, where the whole is designed first and slowly developed while keeping it alive

In nature, systems are created with the whole first approach like a human body starts with one cell and slowly grows and changes while staying alive.

There has been only one book I know about where biology uses the component first approach, it is about an infamous doctor named Frankenstein and for a very good reason the book can be found in the fiction section of the bookstore.

The reason the information systems that we as programmers build with the component first approach work although barely and with a lot of cost to keep them working is because they are trivial compared to the complexity of biological systems.

If we want to increase the inherent complexity of our information systems we cannot continue with the component first approach.

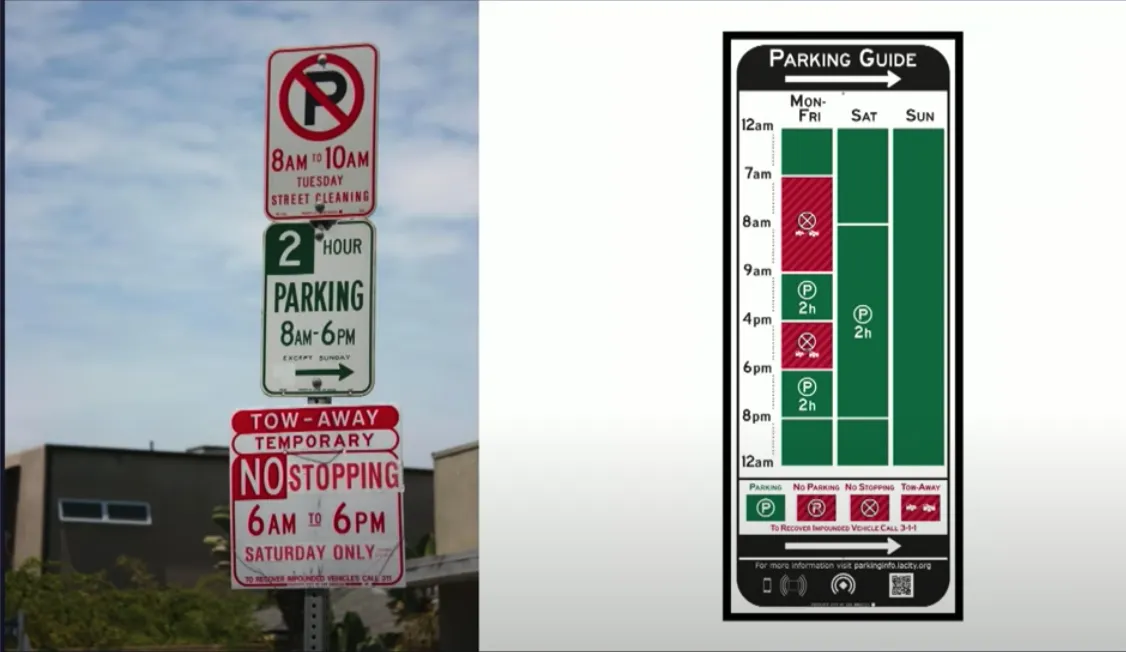

These two images are a good illustration of what you get when following these approaches.

The left image shows components first modeling, it creates a collection of components that seemingly have nothing to do with each other.

The right image, shows whole first modeling, it creates a cohesive whole that is clear and understandable.

The components first modeling is easy to understand locally, one sign at a time, but good luck understanding if you can park there or not.

The left image shows components first modeling, it creates a collection of components that seemingly have nothing to do with each other.

The right image, shows whole first modeling, it creates a cohesive whole that is clear and understandable.

The components first modeling is easy to understand locally, one sign at a time, but good luck understanding if you can park there or not.

Components first modeling

Most of the infrastructure we have in web dev is built with the assumption that we should create systems components first, just glue together a bunch of microservices, or layers like database, cache, specialized index, frontend client, with mismatched interfaces. Or in the small, just glue together a bunch of encapsulated objects.

Whole first modeling in code

In my opinion we should be focusing on the whole first. In the large, I definitely prefer a monolith over microservices, however what we call monoliths, are not at all monolithic, but separate components that are duck taped together because of badly designed interfaces, but I will leave talking about larger scale systems for another blog post. In the small, at code level I will reference this blog post from Casey Muratori, where he contrasts OOP style inheritance, with just simple switch cases, and it is a good example, to illustrate whole first modeling, I will write the code in Java instead of C++.

// focusing on differences first

abstract class SomeType {

public abstract void doSomethingDifferent()

public Whatever doSomethingShared() {

// do shared stuff

return whatever

}

}

class SomeSubType1 extends SomeType {

@Override

public void doSomethingDifferent() {

Whatever whatever = doSomethingShared()

// use shared stuff

// ...

// do different stuff

}

}

class SomeSubType2 extends SomeType {

@Override

public void doSomethingDifferent() {

Whatever whatever = doSomethingShared()

// use shared stuff

// ...

// do different stuff

}

}

// focusing on whole first

public enum SomeType {

SubType1,

SubType2

}

// ...

public static void doSomething() { // doSomething

Whatever whatever = // ...

// do shared stuff

switch (theType) {

case SubType1:

// do different stuff

case SubType2:

// do different stuff

}

// do other shared stuff

}

In the difference-first approach, you define differences in separate places and then bring in shared functionality. In contrast, the shared-first approach centralizes common functionality, allowing differences to branch out. The difference-first approach just scatters logic across multiple files leading to code bloat and sources of bugs due to the need to bring in shared stuff. The more a project matures and the more system like it becomes, the more cross cutting concerns will dominate and the more hoops you will have to jump through to get something done. Take this focus on differences far enough, not just separating it to different classes, but instead to different languages and you get the modern web, with javascript, html, css. I also want to state that dynamic dispatch vs switch case is not the essence of what I am talking about, but in the examples below you can see this shared first vs difference approach seen in different forms. For example the same problem happens with a bunch of nested sum and product types in typed functional languages, it still creates all that unnecessary hierarchical structure that makes the codebase a bureaucratic hell to work in. As I mentioned in Synthetic thinking the more we separate the components of a system the harder it will be for the whole to fulfill its function. Here are some other examples that I've seen on the internet, and come to mind:

- Here Casey is talking to The Primeagen about how to structure his game, and that instead of prematurely separating his data into different parts, just use a big struct, and put everything there.

- In this handmade hero video: Sparse Entity System(If you want full context, watch the whole video, it is worth it) Casey introduces his sparse Entity system, where he just puts all the data for each possible entity into one big struct, he starts with the whole first, and programs like that for a lot of time, until something is worth separating. This kind of code architecture makes code more maintainable because of The law of conservation of coupling

- This great post from Ryan Fleurys UI programming series illustrates how most widgets in the screen share a lot of functionality and instead of creating a proliferation of different widgets, it has one base widget with support for a bunch of functionalities.

- This part of the post from the great 37signals team talking about how in Basecamp, has base model called

Recording, and thing like: todos, comments, chat lines, cards are all derived fromRecording - Here Norbert talks about how in Datomic you can easily link any entity to any other entity, in github you have repos, users, and organizations, and you can just add a reference attribute like

:repo/ownerthat can point to either a user, or an organization. On the other hand in sql you cannot easily link the repo table to either an organization or user. You need a database migration to add a new kind of foreign key. If however the user, and organization, already shared some kind of entity id (like the Recording in the basecamp example), it would be a lot easier to link them.

Whole first modeling for the domain

This is where we can get rid of a lot of of the accidental complexity from the system when building business apps, as much of accidental complexity exists in the model in the head of the person writing the specifications, so if you just directly model that in code from the developers point of view it looks like essential complexity, but viewed globally it is accidental complexity. In the former section I talked about whole first modeling in terms of how to structure the code. In this section I will talk about how to choose abstractions to model from reality with the whole system in mind. Let's say you have a web store and your boss says that he wants you to add a promotional price to some products so that the old price is crossed out and the promotional price is shown. What happens if you directly model that in the database?

--- product table

price INTEGER NON NULL,

promo_price INTEGER NON NULL,

--- ...

--- you will probably need a combined index

CREATE INDEX idx_price product(price, promo_price)

--- ...

--- query for product list page

SELECT

product_id,

--- ...

case when promo_price != 0 and promo_price < price then promo_price else price end as product_price,

--- ...

WHERE product_price < :filter_price_max

AND :filter_price_min < product_price

--- ...

You will need additional logic in all the queries where you want to sort or filter, based on prices.

However if you don't directly model the requirements, but try to find concepts that are more stable across the system and use those as the base model, you can decrease the complexity of your system.

In this case the concepts or map that was directly in the head of the stakeholder was price, and promo_price, but if instead we model, effective_price, and fake_price, where effective_price means the actual price of the item, that will be sold for, and should be used for sorting, and filtering, and fake_price that is shown as the crossed out price in the product page to persuade customers to buy the product.

--- product table

effective_price INTEGER NON NULL,

fake_price INTEGER NON NULL,

--- ...

--- you will probably need a compined index

CREATE INDEX idx_price product(effective_price)

--- ...

--- query for product list page

SELECT

product_id,

--- ...

effective_price,

--- ...

WHERE effective_price < :filter_price_max

AND :filter_price_min < effective_price

--- ...

What happens is that we won't need logic in the query, and only need to index a single column, instead of two.

price and promo_price were not stable across the whole system, we needed to derive a different concept from them in every query, but if we choose to model the concept of effective_price that is stable across the whole system, we ged rid of a lot of unnecessary complexity.

We can do the transformation in the admin panel from the concepts of the user price and promo_price to effective_price and fake_price, or we can talk to the stakeholder and just use effective_price and fake_price in the admin panel and move the complexity completely out of the system.