Modeling - territory first

I already mentioned the quote "The map is not the territory" by Alfred Korzybski in Abstraction in programming. I want to explain how staying as close as possible to the territory when modeling helps with software maintainability. In programming we basically have two territories:

- the physical computer that the software runs on

- the domain from reality that we want to model.

Domain territory

When programming we often think that we are modeling the territory, when in fact we are modeling a map, either our or some other persons map. The issue is that most people's mental models are overfitted to a specific context that doesn’t account for the entire process. If we, as programmers, naively model based on that limited perspective, we’ll be forced to restructure our models as we uncover more of the system beyond the initially assumed context. When programmers talk about premature abstraction or over-engineering, the problem usually is that the initially chosen abstraction was an overfitted, shallow, context dependent one that was too close to the map and maps can often lead you astray. Here is an example: You are building a web store and you model the order table like so:

CREATE TABLE address (

id INTEGER PRIMARY KEY,

--- etc...

)

CREATE TABLE order (

id INTEGER PRIMARY KEY,

total INTEGER,

billing_address INTEGER REFERENCES address (id),

shipping_address INTEGER REFERENCES address (id),

--- etc.....

);

CREATE TABLE order_item (

id INTEGER PRIMARY KEY,

order INTEGER REFERENCES order (id),

product INTEGER REFERENCES product (id),

quantity INTEGER,

--- etc....

);

Later you get new requirements: they want to support shipping different items to different addresses. One solution would be to

- create an

order_shipping_addresstable to group differentorder_items, and addresses to ship them to - to either remove the

shipping_addresscolumn from theordertable, and move everything toorder_shipping_address, or to leave theshipping_addressfor the simple cases, and useorder_shipping_addresstable for the more complicated cases that require multiple shipping addresses - if the modeling is done in a OOP first way with a different subclass for multi shipping address orders and translated to SQL via an ORM, it could be even worse.

CREATE TABLE order_item_shipping_address (

id INTEGER PRIMARY KEY,

order INTEGER REFERENCES order (id),

order_item INTEGER REFERENCES order_item (id),

shipping_address INTEGER REFERENCES address (id),

)

Structural changes like this are not well supported in RDBMSs and cause a lot of friction for their users, that is why there are countless libraries, and even companies that specialize in database migrations. What can we do here? We either leave the naive version and do the restructuring when and if we have to or we do the work upfront and take the risk that it wasn't necessary, this is usually called over-engineering or premature abstraction But there is a third way. We realize that the way we modeled the domain is wrong, and used a subjective, overfitted map as a load bearing abstraction which in this example that was the "order" and move the information to a a different place that is close to the territory. I learned this way of looking at business modeling from Kimball dimensional modeling which is a data modeling technique developed for data warehouses (databases optimized for analytics), however its principles are useful in a much wider range of problems. The principles useful here are:

- modeling the business process instead of nouns

- using the smallest grain as the base

- use dimension and fact tables to model records of business processes

Instead of modeling with the order noun as load bearing abstraction and linking other data to it. We use the smallest grain

line_itemand model the processes.

CREATE TABLE dim__address (

id INTEGER PRIMARY KEY,

--- etc...

)

CREATE TABLE fact__order_line_item (

order_id INTEGER,

product INTEGER REFERENCES product (id),

billing_address INTEGER REFERENCES address (id),

shipping_address INTEGER REFERENCES address (id),

--- etc...

);

Here we put the information on the smallest grain fact__order_line_item and this way changing shipping address for some items is trivial and does not require a database migration. The order_id here is a degenerate dimension, or an id without a table, we don't put information in the order entity. We can have an order entity in the app code, but it should be a derived value and not ground truth.

I find that modeling the territory (processes) instead of the map (objects, nouns, entities) leads to simpler and more stable design.

Physical computer territory

Here are some examples of how choosing abstraction closer to the territory of the computer not only increase performance but simplifies code. Byte Positions Are Better Than Line Numbers - Casey Muratori When a compiler returns an error it usually gives the line number and the character number on that line where it encountered the error. Here, Casey talks about why returning the byte position instead of the line and character number is better. If you want to open an editor to that place where the error occurred:

- if you get the line and character number: you have to parse the file and calculate where that line and column position is in the file

- if you get the byte position: you already know where you have to open the text editor in that file, there is nothing to calculate The best way to increase performance, maintainability and decrease bugs is to not write code that does not need to exist. Turns are Better than Radians is another example of removing code that is just not needed. When you use trigonometric functions in math libraries, that ask for radians, the user usually multiplies the turns with 2pi or tau, but the library gets rid of radians and actually calculates with turns. So there is an extra transform from turns -> radians -> turns just for the trigonometric function interface. The reason trigonometric functions use radians, is because symbolic calculus (derivation, integration) work nice only with radians. The problem is that programming math libraries don't do symbolic calculus, so using radians is an unnecessary complication. There is a lot of good information about writing code that aligns with how computers work: Data oriented design - Mike Acton Data oriented design book - Richard Fabian Data oriented design blog - Richard Fabian Data oriented design resources Performance aware programming course - Casey Muratori

Some notes on Domain Oriented Design (DDD)

There are many aspects to DDD (almost 600 pages), some are good, some are bad. Ideas I consider good from DDD are things like: CQRS, event sourcing , materialized views, which are not particularly new ideas. If you squint a little they are just replicating ideas from databases. However the OOP mindset makes everything overly complicated, for example when things like events are represented as classes in code. Ideas which I consider bad from DDD are things like:

Under domain-driven design, the structure and language of software code (class names, class methods, class variables) should match the business domain. -- DDD wikipedia

This is a bad idea as I explained in Modeling - data first, modeling domain logic in data, instead of programming language concepts like objects, functions, methods leads to hard to maintain and poorly performing code.

Bounded contexts are another bad idea:

"DDD is against the idea of having a single unified model; instead it divides a large system into bounded contexts, each of which have their own model"

Interviewing people in a business and naively copying the overfitted, shallow, context specific maps that are in their head into code. Leads to a bunch of decoupled most probably inconsistent bounded contexts, that are a nightmare to integrate. People are good at handling inconsistency, so having those maps int their heads is not a big problem, because they can easily correct for it, but computers are very bad at dealing with inconsistency. The whole idea with digitizing business processes should be to help the people working in the business understand the whole business better to make it more effective, keeping the decoupled model, they already have and putting it into computers, where they have no way of correcting it, just makes things worse, at least without computers they can correct for inconsistencies. Bureaucracy is bad as it is, there is no need to make it worse with computers.

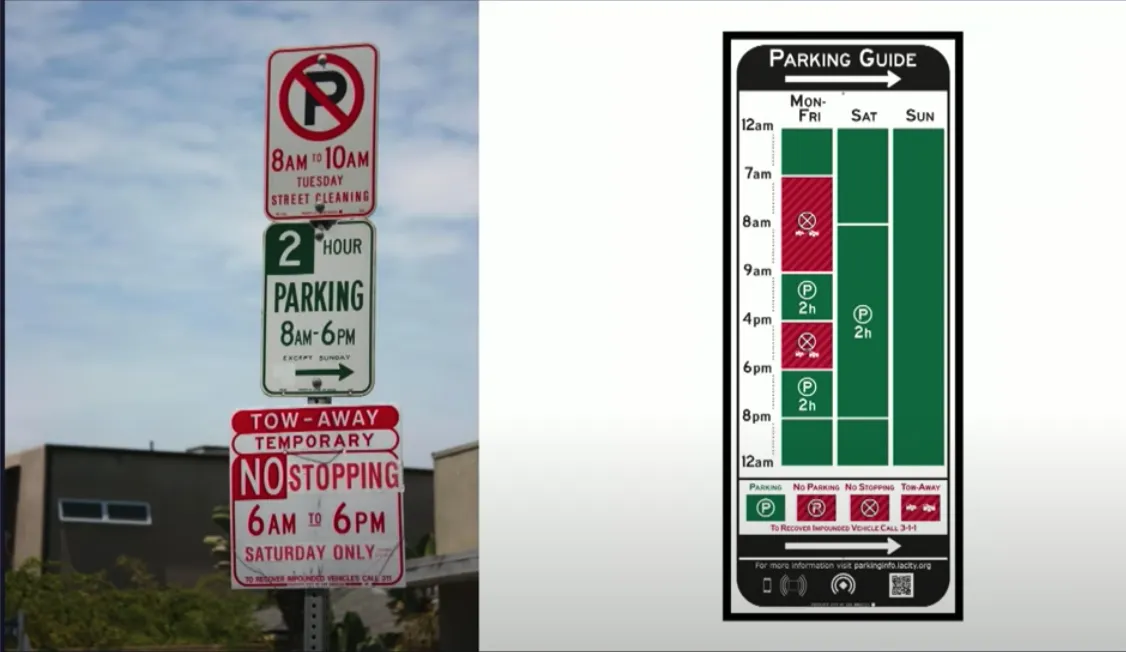

Here is a great image to illustrate what happens with different overfitted, shallow, context dependent, closed maps, like bounded contexts.

Whoever was in charge of the image on the left faithfully followed the open closed principle from SOLID "software entities ... should be open for extension, but closed for modification". One of the marketed features of using the open closed principle is: "Flexibility: Adapts to changing requirements more easily". I have to say that it does adapt more easily to changing requirements and makes change easy, you just slap on another sign and your job is done. Now, will someone who wants to park there actually understand if they can park or not? It doesn't matter, you did your job. The same exact thing happens in enterprise Java or C# code. With image on the right on the other hand, change is slightly harder, depending on how the sign is made, you can either change the tiles in the sign, or have to change the whole sign. But, it is clear and understandable, because they actually did the work of understand the territory better. Here is another good explanation of API design: Casey talks about API design